Understanding Generative AI as a Beginner: From the Basics to GANs

Understand the basic principles of Gen AI, the difference between discriminative and generative models and how GANs and transformer models work.

Ever since the hype surrounding ChatGPT and similar tools began, everyone has been talking about Generative AI. Based on a short prompt, machines generate new content: they write texts, paint pictures or develop program code. Salesforce, for example, has added further Einstein features based on Gen AI to its latest marketing product, Marketing Cloud Growth. Alongside the benefits such tools bring, it is important that we as a society discuss how, and to what extent, we want them to be integrated into our lives.

In this article, I explain the basic principles of Gen AI, the difference between discriminative and generative models, and how GANs and transformer models work.

What is Generative AI and NLP?

Generative Artificial Intelligence (Gen AI) refers to machine learning models that can generate new content based on the data they were trained on. Examples include answering a user's question with generated text, creating images from a prompt, or writing code to help with development.

Generative AI became widely known to the public after the Transformer architecture was adopted in natural language processing (NLP). Generative AI models in the NLP field use machine learning techniques to learn from huge amounts of text data; well-known examples are GPT and BERT. These models use neural networks to capture the context of a text. Based on this context, models such as GPT generate new content.

How do such models work?

In simple terms, you enter a prompt, the model processes this prompt and returns an answer.

The input can be a question in text form, but in some models (like ChatGPT) it can also be a PDF or an image. The output is then an answer to your question or, for example, a processed image, a composed piece of music, etc.

Positioning of Gen AI in the world of machine learning

There are two broad categories of models in the world of machine learning:

Discriminative models aim to recognize patterns in the available data and make well-founded predictions. In probabilistic terms, they learn the conditional probability p(y | x) of an output y given an input x. For example, you train a model to classify whether an image shows a dog, or to predict electricity consumption from the input data.
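To make this concrete, here is a minimal sketch of a discriminative model: logistic regression trained with plain gradient ascent, which learns p(y | x) directly. The single numeric feature per image and the data are hypothetical; a real image classifier would learn from pixels, typically with a deep neural network.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function: maps any real number to (0, 1)
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_logistic_regression(xs, ys, lr=0.1, epochs=500):
    """Learn p(y=1 | x) = sigmoid(w*x + b) by gradient ascent on the log-likelihood."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters
        errors = [y - sigmoid(w * x + b) for x, y in zip(xs, ys)]
        w += lr * sum(err * x for err, x in zip(errors, xs)) / n
        b += lr * sum(errors) / n
    return w, b

# Hypothetical toy data: one numeric feature per image, label 1 = "dog", 0 = "no dog"
rng = random.Random(0)
xs = [rng.gauss(2.0, 1.0) for _ in range(100)] + [rng.gauss(-2.0, 1.0) for _ in range(100)]
ys = [1] * 100 + [0] * 100

w, b = train_logistic_regression(xs, ys)
print(f"p(dog | x = 3)  = {sigmoid(w * 3 + b):.2f}")
print(f"p(dog | x = -3) = {sigmoid(w * -3 + b):.2f}")
```

The model only learns where to draw the boundary between the two classes; it has no notion of what the inputs themselves look like, so it cannot produce new examples.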

Generative models, on the other hand, not only analyze the data but can also generate new data or content that is not copied directly from the training data. The models “imagine” examples that appear realistic and credible. To do so, generative models learn the underlying probability distribution p(x) of a data set in order to create new, similar data. The best-known models are Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs) and transformer models such as GPT.
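By contrast, a generative model learns the distribution of the data itself and can then sample from it. The sketch below is deliberately minimal and assumes the data follows a normal distribution: "training" estimates its mean and standard deviation, and "generation" draws new values. The height data is hypothetical. GANs, VAEs and GPT learn far more complex distributions with neural networks, but the idea of learning a distribution and then sampling new data from it is the same.

```python
import random
import statistics

def fit_gaussian(data):
    """'Training': estimate the parameters of a normal distribution from the data."""
    return statistics.fmean(data), statistics.pstdev(data)

def sample_new_data(mu, sigma, n, rng):
    """'Generation': draw new samples from the learned distribution."""
    return [rng.gauss(mu, sigma) for _ in range(n)]

rng = random.Random(42)
# Hypothetical training data, e.g. body heights in cm
training_data = [rng.gauss(175.0, 7.0) for _ in range(1000)]

mu, sigma = fit_gaussian(training_data)
new_samples = sample_new_data(mu, sigma, 5, rng)
print(f"learned mu = {mu:.1f}, sigma = {sigma:.1f}")
print("new samples:", [round(x, 1) for x in new_samples])
```

The new samples look like plausible heights, yet none of them is taken from the training data.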

How do GANs (Generative Adversarial Networks) work?

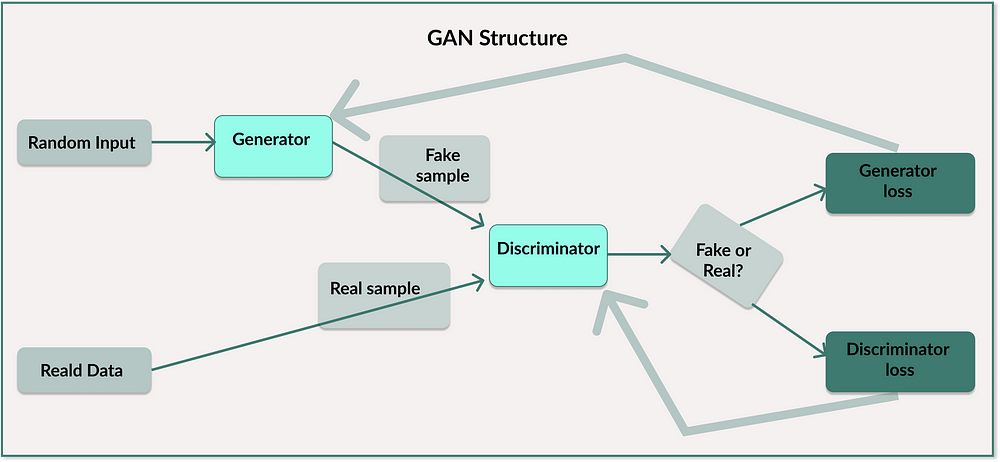

GANs are useful wherever we want to generate realistic data. GANs consist of two competing neural networks: the generator and the discriminator. These two networks play a game against each other, leading to increasingly realistic results:

The generator creates fake data that should look as realistic as possible. In doing so, it tries to fool the discriminator into believing that the generated data is real.

The discriminator evaluates both real data from the training data set and the fake data generated by the generator. The aim is for the discriminator to be able to distinguish between real and fake data.

This competition between the two networks continues throughout training: the generator gets better at generating realistic data, while the discriminator gets better at distinguishing between real and fake data.

How does the training of GANs work?

In the training process, the discriminator and the generator are trained separately, in alternating steps. The discriminator is first trained with real data from the training data set, so that it learns to classify this data as ‘real’. It is then shown fake data produced by the generator, which it should learn to classify as ‘fake’. The generator is then updated using backpropagation through the discriminator: it tries to generate data that looks as similar as possible to the real data set in order to fool the discriminator. These two steps are repeated, with both networks constantly learning against each other.
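The alternating procedure can be sketched on a deliberately tiny, hypothetical 1-D problem: real data drawn from a normal distribution with mean 2, a linear generator G(z) = a·z + b and a logistic discriminator D(x) = sigmoid(w·x + c). The gradients are written out by hand instead of relying on a deep learning framework, and the generator uses the common "non-saturating" objective (maximize log D(G(z))). All names and numbers are assumptions for illustration.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, batch=64, lr=0.05, seed=0):
    """Alternating GAN training on 1-D data (hypothetical toy setup).

    Real data:      x ~ N(2, 1)
    Generator:      G(z) = a * z + b,  z ~ N(0, 1)
    Discriminator:  D(x) = sigmoid(w * x + c), the estimated probability that x is real
    """
    rng = random.Random(seed)
    a, b = 1.0, -2.0   # generator starts far away from the real data
    w, c = 0.0, 0.0    # discriminator starts undecided: D(x) = 0.5 everywhere
    history = []
    for _ in range(steps):
        # Step 1: train the discriminator on real and fake data.
        # Gradient ascent on log D(real) + log(1 - D(fake)).
        real = [rng.gauss(2.0, 1.0) for _ in range(batch)]
        fake = [a * rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        gw = gc = 0.0
        for x in real:   # push D(x) towards 1 ("real")
            d = sigmoid(w * x + c)
            gw += (1.0 - d) * x
            gc += 1.0 - d
        for x in fake:   # push D(x) towards 0 ("fake")
            d = sigmoid(w * x + c)
            gw -= d * x
            gc -= d
        w += lr * gw / batch
        c += lr * gc / batch

        # Step 2: train the generator to fool the updated discriminator.
        # Gradient ascent on log D(G(z)), chained through D by hand (backpropagation).
        ga = gb = 0.0
        for _ in range(batch):
            z = rng.gauss(0.0, 1.0)
            d = sigmoid(w * (a * z + b) + c)
            dlog_d_dx = (1.0 - d) * w   # derivative of log D(x) with respect to x
            ga += dlog_d_dx * z         # dx/da = z
            gb += dlog_d_dx             # dx/db = 1
        a += lr * ga / batch
        b += lr * gb / batch
        history.append(b)
    return a, b, history

a, b, history = train_toy_gan()
print(f"generator mean b = {b:.2f} (real data mean: 2.0)")
```

Because this toy discriminator is linear in x, it can only detect a difference in means. The generator therefore learns to match the mean of the real data, but its spread a is not driven towards the real spread. Real GANs use deep networks for both players and let a framework such as PyTorch or TensorFlow compute the gradients.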

GANs use special loss functions for both networks, and the combined objective is often described as a minimax loss. The generator network attempts to maximize the probability that the discriminator classifies the generated data as real. The discriminator network, on the other hand, tries to maximize the probability of correctly classifying real data as ‘real’ and generated data as ‘fake’. I won’t go into the math behind this in this article, but you can find it in the Neptune.AI article “Understanding GAN Loss Functions”.
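For reference, this is the minimax objective from the original GAN paper by Goodfellow et al. Here $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the generator's output for a random noise vector $z$, $p_{\text{data}}$ is the distribution of the real data and $p_z$ is the noise distribution:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator maximizes both terms, while the generator only influences the second term and tries to minimize it. In practice, the generator is usually trained to maximize $\log D(G(z))$ instead, which gives stronger gradients early in training; this "non-saturating" variant, also proposed in the original paper, corresponds to the generator goal described in the paragraph above.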

How do transformer models such as GPT or BERT work?

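Transformer models such as GPT (Generative Pre-trained Transformer) or BERT (Bidirectional Encoder Representations from Transformers) are based on the Transformer architecture. This architecture has become known for its ability to process large amounts of text data efficiently: its attention mechanism lets each word draw on the context of other words in the sequence, and all positions of a sequence can be processed in parallel during training.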
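At the core of the Transformer is scaled dot-product attention, softmax(QKᵀ / √d_k)·V, from the paper "Attention Is All You Need". The following is a minimal pure-Python sketch, assuming three hypothetical 2-dimensional token vectors and using them directly as queries, keys and values; real Transformers derive Q, K and V with learned projection matrices and use multiple attention heads. The optional causal mask illustrates the difference between GPT and BERT: GPT-style models let each position attend only to earlier positions, while BERT-style encoders let every position attend to all positions.

```python
import math

def softmax(scores):
    # Subtract the maximum for numerical stability; exp(-inf) == 0.0 removes masked positions
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V, causal=False):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, computed row by row."""
    d_k = len(K[0])
    outputs = []
    for i, q in enumerate(Q):
        # Similarity of query i with every key, scaled by sqrt(d_k)
        scores = [sum(qe * ke for qe, ke in zip(q, k)) / math.sqrt(d_k) for k in K]
        if causal:
            # GPT-style mask: position i may only attend to positions 0..i
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights = softmax(scores)
        # Output i is a weighted average of the value vectors
        outputs.append([sum(wt * v[col] for wt, v in zip(weights, V)) for col in range(len(V[0]))])
    return outputs

# Three hypothetical token vectors; Q = K = V = X for simplicity
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
bert_style = attention(X, X, X)               # every token sees all tokens
gpt_style = attention(X, X, X, causal=True)   # every token sees only itself and earlier tokens
print("bidirectional:", [[round(v, 3) for v in row] for row in bert_style])
print("causal:       ", [[round(v, 3) for v in row] for row in gpt_style])
```

With the causal mask, the first token can only attend to itself, while the last token sees the whole sequence and therefore gets the same result in both variants.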

GPT is an autoregressive model. This means that it predicts the next token (a word or part of a word) based on the previous tokens and generates text sequentially, from left to right. Because it works unidirectionally, the context of a word is derived only from the preceding words. In a first step, a GPT model is pre-trained on huge text datasets to develop a general understanding of language. In the fine-tuning phase, the model is then adapted to specific tasks to learn specialized knowledge.
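The autoregressive loop (predict the next token, append it, repeat) can be sketched with a drastically simplified stand-in for GPT: a bigram model that simply counts which word follows which in a tiny hypothetical corpus. Unlike GPT, which uses a Transformer and conditions on all previous tokens, this sketch only looks at the last token, but the left-to-right generation pattern is the same.

```python
import random
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count which token follows which: a crude stand-in for learned next-token probabilities."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def generate_text(counts, prompt, max_new_tokens=5, seed=0):
    """Autoregressive generation: sample the next token from the counts, append it, repeat."""
    rng = random.Random(seed)
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        candidates = counts.get(tokens[-1])
        if not candidates:
            break  # no known continuation for the last token
        words, freqs = zip(*candidates.items())
        tokens.append(rng.choices(words, weights=freqs)[0])
    return " ".join(tokens)

# Hypothetical training corpus
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat chased the dog",
]
model = train_bigram(corpus)
print(generate_text(model, "the cat"))
```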

Unlike GPT, BERT takes into account the context of a word from both the preceding and the following words (bidirectional). This makes BERT particularly good at capturing finer nuances in texts. Pre-training uses a technique called ‘masked language modeling’, in which random words in a sentence are masked. The model must then predict the masked words from the context before and after them. In simple terms, it’s like the cloze (fill-in-the-blank) exercises we did at school. This general pre-training is followed by fine-tuning the model for specific tasks.
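The cloze idea can be sketched in a few lines. This toy stand-in does not use a neural network: it masks random words (BERT masks about 15% of tokens; this sketch always uses a `[MASK]` token, a simplification of the real recipe) and predicts a masked word by counting which word appeared between the same left and right neighbors in a hypothetical mini corpus. The point it illustrates is that the prediction uses context from both sides.

```python
import random
from collections import Counter, defaultdict

MASK = "[MASK]"

def mask_tokens(tokens, rate=0.15, rng=None):
    """Hide a random subset of tokens behind [MASK]; return the masked sequence and the answers."""
    if rng is None:
        rng = random.Random(0)
    masked, answers = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < rate:
            masked.append(MASK)
            answers[i] = tok
        else:
            masked.append(tok)
    return masked, answers

def train_context_counts(corpus):
    """For every (left neighbor, right neighbor) pair, count which word appeared between them."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]  # sentence start/end markers
        for left, mid, right in zip(tokens, tokens[1:], tokens[2:]):
            counts[(left, right)][mid] += 1
    return counts

def fill_mask(counts, tokens, i):
    """Predict the word at position i from the words before AND after it (bidirectional)."""
    padded = ["<s>"] + tokens + ["</s>"]
    left, right = padded[i], padded[i + 2]  # neighbors of position i
    candidates = counts.get((left, right))
    return candidates.most_common(1)[0][0] if candidates else None

# Hypothetical training corpus
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "a bird sat on the fence",
]
counts = train_context_counts(corpus)

sentence = "the cat sat on the mat".split()
masked, answers = mask_tokens(sentence, rng=random.Random(1))
print("randomly masked:", " ".join(masked))

# A fixed cloze example: the neighbors "cat" and "on" point to the answer
cloze = ["the", "cat", MASK, "on", "the", "mat"]
print("prediction for position 2:", fill_mask(counts, cloze, 2))
```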

Where can you continue learning?

Generative AI Concepts: DataCamp course (first part is free)

Generative Adversarial Networks: paper by Ian Goodfellow et al.

Attention Is All You Need: paper by Ashish Vaswani et al. about Transformers

Final Thoughts

Generative AI will continue to grow in the coming years. Companies such as Salesforce are already integrating many Gen AI features into their products, although not all of them are mature enough to be useful yet. At the same time, it is important that we as a society discuss how, and to what extent, we want such tools to be integrated into our lives.