A Practical Dive into NumPy and Pandas: Data Analysis with Python

Learn the basics of NumPy and Pandas during your coffee break.

If you’re using Python for data analysis or data science, you’ll quickly encounter the commands ‘import numpy as np’ and ‘import pandas as pd’. When I started using Python for data analysis a few years ago, I found it challenging as a beginner to know which libraries were suitable for which tasks and where to start. Until then, I had mainly used the R programming language for data analysis and Python more for application development. Python libraries are collections of modules that provide reusable code, such as pre-built functions and classes, and make programming easier and much more efficient. This 8-minute article introduces the two best-known Python libraries for data science and data analysis and gives you a basic understanding of NumPy and Pandas.

Most well-known Python libraries, like Pandas, NumPy, SciPy, and Matplotlib, are open source. To install a library, run ‘pip install NAMEOFTHELIBRARY’ in your terminal. It is better, however, to install the packages a project needs in a dedicated environment to avoid dependency conflicts. If you’re unsure how to set up such an environment, check out the guide in one of my recent articles, Python Data Analysis Ecosystem — A Beginner’s Roadmap.

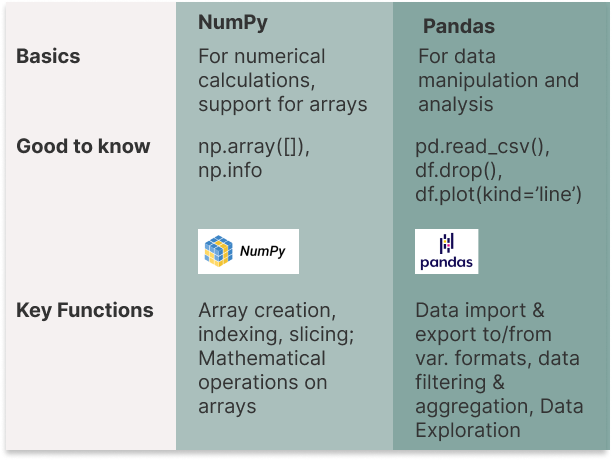

How to Understand the Basics of NumPy and Pandas

NumPy

NumPy is one of the most fundamental libraries for numerical computing. If you want to do data science with Python, you need to know the basics of NumPy. The library provides support for large, multidimensional arrays and matrices. NumPy achieves its high efficiency by running its internal computations in compiled C or Fortran code and by managing memory efficiently. You can import the library with “import numpy as np”.

What are arrays?

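NumPy arrays are similar to Python lists, but all elements of an array share a single data type and are stored in one contiguous block of memory. This allows you to perform operations on large amounts of data efficiently. The main advantage of NumPy arrays is that they support vectorized operations: an operation is applied to the whole array at once in compiled code instead of element by element in a Python loop.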
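A minimal sketch of what this means in practice, using illustrative variable names: the same operator behaves differently for a list and for an array, an array stores one dtype for all its elements, and an explicit loop produces the same result as the vectorized expression.

```python
import numpy as np

numbers_list = [1, 2, 3]
numbers_array = np.array([1, 2, 3])

# The same operator behaves differently:
# for a list, * 2 repeats the list; for an array, it multiplies every element
repeated = numbers_list * 2    # [1, 2, 3, 1, 2, 3]
doubled = numbers_array * 2    # [2 4 6]

# All elements of an array share one data type (dtype);
# mixing ints and floats stores every element as a float
mixed = np.array([1, 2.5, 3])  # dtype float64

# An explicit loop and a vectorized expression give the same result,
# but the vectorized version runs its loop in compiled code
values = np.arange(100_000, dtype=np.float64)
doubled_loop = np.empty_like(values)
for i in range(len(values)):
    doubled_loop[i] = values[i] * 2
doubled_vectorized = values * 2
```

If you want to see the speed difference on your own machine, you can time both versions, for example with Python's built-in `timeit` module; the exact numbers depend on your hardware.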

NumPy operations via arrays

With NumPy, you don’t need explicit loops (for loops), which leads to a significant increase in performance. You will notice the difference especially with large amounts of data.

In the following code example, we first create two large arrays and then add them element by element without writing a loop. We then output only the first 10 elements of the result (1+6=7, 2+7=9, 3+8=11, 4+9=13, 5+10=15, and then the pattern repeats).

```python
# NumPy operations via arrays
import numpy as np

# Creating two large NumPy arrays (500,000 elements each)
a = np.array([1, 2, 3, 4, 5] * 100000)
b = np.array([6, 7, 8, 9, 10] * 100000)

# Performing a vectorized operation without explicit loops
result = a + b  # Element-wise addition

# Displaying a portion of the result
result_slice = result[:10]  # Only the first 10 elements
print(result_slice)  # [ 7  9 11 13 15  7  9 11 13 15]
```

Array indexing and slicing

You can also access and manipulate parts of an array. This works much like indexing Python lists, but NumPy offers extended options for multidimensional arrays. With array indexing, you can access a single element or a group of elements in an array.

In the following code example, we first create a two-dimensional NumPy array (a 3x3 matrix with three rows and three columns). Because indexing starts at 0, [1, 2] accesses the element in the second row and the third column, which is 6. We then access all elements in the first row and then all elements in the second column.

```python
# Array indexing with NumPy
import numpy as np

# Creating a two-dimensional array (3x3 matrix)
array_2d = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

# Accessing a specific element: second row (index 1), third column (index 2)
specific_element = array_2d[1, 2]  # 6

# Accessing an entire row, e.g., the first row
row = array_2d[0, :]  # [1 2 3]

# Accessing an entire column, e.g., the second column
column = array_2d[:, 1]  # [2 5 8]
```

With array slicing, you can cut out part of an array. In the next code example, we extract the first two rows and the middle two columns and save the elements in a new array. Slicing returns a view of the original data rather than a copy: if you modify an element of the sub-array, the corresponding element in the original array changes as well. To be on the safe side, create a copy of the sub-array with .copy(). We then use the colon to extract first all elements in the second column and then all elements in the second row.

```python
# Array slicing with NumPy
import numpy as np

# Creating a two-dimensional array (3 rows, 4 columns)
array_2d = np.array([[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]])

# Slicing to extract a sub-array:
# rows 0 and 1 (the first two rows) and columns 1 and 2 (the middle two columns);
# the end index of a slice is excluded
sub_array = array_2d[0:2, 1:3].copy()  # [[2, 3], [6, 7]]

# Slicing to extract all elements from the second column
second_column = array_2d[:, 1]  # Every row, only the second column: [2, 6, 10]

# Slicing to extract a specific row
second_row = array_2d[1, :]  # The second row, all columns: [5, 6, 7, 8]

print(sub_array, second_column, second_row)
```

You will need these two techniques in particular when working with large data sets. Because basic slicing returns a view, you can work with parts of your array without copying the entire array.
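A minimal sketch of the view-versus-copy behaviour, reusing the 3x4 array from the slicing example: `np.shares_memory` reports whether two arrays use the same underlying data. It also shows a related rule: indexing with a list of indices (so-called advanced indexing) always returns a copy, while basic slicing returns a view.

```python
import numpy as np

array_2d = np.array([[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]])

view = array_2d[0:2, 1:3]              # Basic slicing: a view on the same memory
safe_copy = array_2d[0:2, 1:3].copy()  # Explicit, independent copy
picked = array_2d[[0, 2]]              # List of row indices (advanced indexing): always a copy

view[0, 0] = 99        # Also changes array_2d[0, 1]
safe_copy[1, 1] = -1   # Leaves array_2d unchanged
picked[0, 0] = -5      # Leaves array_2d unchanged

print(array_2d[0, 1])                         # 99
print(np.shares_memory(view, array_2d))       # True
print(np.shares_memory(safe_copy, array_2d))  # False
print(np.shares_memory(picked, array_2d))     # False
```

Views are what make slicing cheap on large arrays; the price is that a change made through the view is visible in the original.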

NumPy functions

You can use NumPy’s mathematical functions to perform statistical and mathematical operations directly on arrays. In data analysis, for example, you need them to quickly understand the distribution of the data by calculating the mean, the standard deviation, or the maximum and minimum.
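The example that follows works on a one-dimensional array. For two-dimensional data, the `axis` argument decides whether a statistic is computed over all elements, per column, or per row. A minimal sketch with hypothetical measurements (3 rows, 4 columns), also using `np.percentile` for the median:

```python
import numpy as np

# Hypothetical measurements: 3 rows (e.g., days) x 4 columns (e.g., sensors)
measurements = np.array([[2, 4, 6, 8],
                         [1, 3, 5, 7],
                         [3, 5, 7, 9]])

overall_min = np.min(measurements)            # Over all elements: 1
column_means = np.mean(measurements, axis=0)  # One value per column: [2. 4. 6. 8.]
row_max = np.max(measurements, axis=1)        # One value per row: [8 7 9]
median = np.percentile(measurements, 50)      # 50th percentile of all values: 5.0
```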

```python
# NumPy functions
import numpy as np

# Creating an array
data = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])

# Mathematical functions
mean_value = np.mean(data)  # Calculating the mean: 5.5
max_value = np.max(data)  # Finding the maximum value: 10
std_dev = np.std(data)  # Calculating the (population) standard deviation: about 2.87

print(mean_value, max_value, std_dev)
```

With Boolean indexing, you can select or modify the elements that meet a specific condition. It works like a filter that isolates, for instance, values above or below a certain threshold. In the following code example, we retrieve all values greater than 5.

```python
# Boolean indexing with NumPy
import numpy as np

# Creating an array
data = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])

# Boolean indexing
condition = data > 5  # Boolean mask: True where a value is greater than 5
filtered_data = data[condition]  # Applying the mask to the array

print(filtered_data)  # [ 6  7  8  9 10]
```
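Boolean indexing becomes more useful once you combine conditions and use a mask to change values, not only to select them. A minimal sketch on the same array: conditions are combined with `&` (and) and `|` (or), each wrapped in parentheses, and `np.where` picks between two values element by element.

```python
import numpy as np

data = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])

# Combine conditions with & (and) and | (or); each condition needs parentheses
between = data[(data > 3) & (data < 8)]  # [4 5 6 7]
outside = data[(data < 2) | (data > 9)]  # [ 1 10]

# Boolean indexing can also alter values: cap everything above 8 at 8
capped = data.copy()
capped[capped > 8] = 8

# np.where chooses between two options element by element
labels = np.where(data > 5, "high", "low")
```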

Pandas

This library was developed for data manipulation and analysis. Use the “import pandas as pd” command to import it. You can then load your data, for example from an Excel file or a CSV file. Pandas also offers many functions for data manipulation: you can filter the data with df.query(), sort it with df.sort_values(), group it with df.groupby(), or aggregate it with df.agg(). Here, “df” is the name of your DataFrame.
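A minimal sketch of these four methods on a small, made-up sales table (the column names and values are hypothetical and only serve as illustration):

```python
import pandas as pd

# Hypothetical sales data for illustration
df = pd.DataFrame({
    "region": ["North", "South", "North", "East", "South"],
    "product": ["A", "A", "B", "B", "B"],
    "revenue": [120, 80, 200, 150, 90],
})

high_revenue = df.query("revenue > 100")                   # Filter rows with a condition
sorted_df = df.sort_values("revenue", ascending=False)     # Sort rows, largest revenue first
revenue_by_region = df.groupby("region")["revenue"].sum()  # Group by region and sum
summary = df.agg({"revenue": ["min", "max", "mean"]})      # Several aggregations at once

print(high_revenue)
print(revenue_by_region)
print(summary)
```

`groupby()` on its own only defines the groups; an aggregation such as `sum()` turns them into one row per group.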

DataFrame as the central data structure

A DataFrame represents data in tabular form, organized into rows and columns. You can think of it as similar to an SQL table or an Excel spreadsheet. Each column can hold a different data type.

In the code example, we first create two simple DataFrames. Usually, you create your DataFrame directly from imported data (e.g., from an Excel file). We then combine the two DataFrames with concat(). Finally, groupby() groups the combined DataFrame by the “Key” column, and sum() adds up the values within each group.

```python
# Using DataFrames with Pandas
import pandas as pd

# Creating two simple DataFrames
df1 = pd.DataFrame({'Key': ['A', 'B', 'C', 'D'],
                    'Value': [1, 2, 3, 4]})
df2 = pd.DataFrame({'Key': ['B', 'D', 'A', 'E'],
                    'Value': [5, 6, 7, 8]})

# Combining the DataFrames (stacking the rows of df2 below df1)
combined_df = pd.concat([df1, df2])

# Grouping the combined DataFrame by 'Key' and summing the values
grouped_df = combined_df.groupby('Key').sum().reset_index()
print(grouped_df)  # A: 1+7=8, B: 2+5=7, C: 3, D: 4+6=10, E: 8
```

Data exploration

To perform an exploratory data analysis, you typically start by importing your data. With Pandas, you can import various file formats such as CSV, Excel, or JSON files. If your data is stored in a database, you first need to establish a connection to the database and then read your data from it. The code snippet also includes an example for an SQL database.

```python
# Reading your data with Pandas
import pandas as pd

# Example code snippets to import files of various formats using pandas
# (replace the placeholder paths with your own files)

# Importing a CSV file
csv_df = pd.read_csv('path/to/your/file.csv')

# Importing an Excel file (.xlsx files require an engine such as openpyxl)
excel_df = pd.read_excel('path/to/your/file.xlsx')

# Importing a JSON file
json_df = pd.read_json('path/to/your/file.json')

# Importing data from a SQL database
# For this example, you need to have a SQL connection established.
# sql_connection is a placeholder for the actual connection.
# sql_df = pd.read_sql('SELECT * FROM your_table', con=sql_connection)
```

To gain an initial understanding of the data, you can output the first and last rows with head() and tail(). describe() displays the most important summary statistics of the numeric columns (count, mean, standard deviation, minimum, quartiles, and maximum), which helps you spot outliers. You can use the info() method to display the data type of each column and the number of non-null values. In my latest article, you will find 9 important steps for carrying out an exploratory data analysis, with examples of these commands.
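To try the SQL case and the first exploration steps without a real database, a minimal sketch can use Python's built-in `sqlite3` module with an in-memory database. The table name, columns, and values below are made up for illustration; with a real database, you would pass your own connection instead.

```python
import sqlite3

import pandas as pd

# In-memory SQLite database as a stand-in for a real database connection
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE measurements (city TEXT, temperature REAL)")
connection.executemany(
    "INSERT INTO measurements VALUES (?, ?)",
    # Made-up values; the last one is deliberately far from the others
    [("Berlin", 18.5), ("Paris", 21.0), ("Rome", 26.5), ("Oslo", 12.0), ("Madrid", 45.0)],
)
connection.commit()

# Read the query result into a DataFrame, then close the connection
df = pd.read_sql("SELECT * FROM measurements", con=connection)
connection.close()

print(df.head(3))     # First 3 rows
print(df.tail(2))     # Last 2 rows
print(df.describe())  # Summary statistics of the numeric column
df.info()             # Column dtypes and non-null counts (printed directly)
```

In the describe() output, the maximum of 45.0 stands out against the quartiles, which is the kind of outlier this first look is meant to reveal.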

Data visualization

You can also use Pandas to directly display simple plots to quickly identify patterns, trends, and anomalies in the data. Pandas uses Matplotlib for plotting, which is why the code also imports matplotlib.pyplot. With the following code, you can directly output a line plot, a bar plot, a histogram, or a box plot.

```python
# Creating Plots with Pandas
import pandas as pd
import matplotlib.pyplot as plt

# Importing a CSV file
df = pd.read_csv('path/to/your/file.csv')

# Line plot
df.plot(kind='line')
plt.title('Line Plot')
plt.xlabel('Index')
plt.ylabel('Values')
plt.show()

# Bar plot
df.plot(kind='bar')
plt.title('Bar Plot')
plt.xlabel('Index')
plt.ylabel('Values')
plt.show()

# Histogram
df.plot(kind='hist', alpha=0.5)
plt.title('Histogram')
plt.xlabel('Value')
plt.ylabel('Frequency')
plt.show()

# Box plot
df.plot(kind='box')
plt.title('Box Plot')
plt.ylabel('Values')
plt.show()
```
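The snippet above needs a CSV file of your own. A minimal, self-contained sketch with generated data (two hypothetical random-walk series with a fixed seed) draws all four plot types into one figure by passing a Matplotlib Axes to each call via `ax=`, and saves the result to a file; the file name `pandas_plots.png` is an arbitrary choice.

```python
import matplotlib
matplotlib.use("Agg")  # Non-interactive backend: render to a file instead of a window
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

# Hypothetical data: two random-walk series, generated with a fixed seed
rng = np.random.default_rng(seed=0)
df = pd.DataFrame({
    "series_a": rng.normal(size=100).cumsum(),
    "series_b": rng.normal(size=100).cumsum(),
})

# One figure with a 2x2 grid; each pandas plot call draws into one Axes
fig, axes = plt.subplots(2, 2, figsize=(10, 8))
df.plot(kind="line", ax=axes[0, 0], title="Line Plot")
df.head(10).plot(kind="bar", ax=axes[0, 1], title="Bar Plot (first 10 rows)")
df.plot(kind="hist", alpha=0.5, ax=axes[1, 0], title="Histogram")
df.plot(kind="box", ax=axes[1, 1], title="Box Plot")

fig.tight_layout()
fig.savefig("pandas_plots.png")
plt.close(fig)
```

Restricting the bar plot to the first 10 rows keeps it readable; a bar per row quickly becomes cluttered on larger data sets.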

Conclusion

Other libraries that you will often encounter at the beginning are Matplotlib, Plotly, Statsmodels, and Scikit-learn. Matplotlib and Plotly are particularly useful for creating visualizations (including interactive ones). Statsmodels is mainly used to estimate statistical models, run statistical tests, and explore data. And if you want to get started with machine learning, Scikit-learn is the best place to start.

I’m sure you’re already familiar with the names PyTorch and TensorFlow. These are the two best-known libraries for machine learning, particularly deep learning. PyTorch, developed by Facebook’s AI research group, is especially popular in the research community. TensorFlow, created by Google, is known for its robust deep learning capabilities. An introduction to these two libraries will follow in another article.